This is the done/not-doing change graveyard.

(Green is done. Yellow/Orange is in progress. Red is not started. Purple is declined.)

- (Replaced with stor2 backup server) I have doubled backup capacities across the board. Now there is 16TB of raw backup storage for the hot backups, 30TB of cold storage backups for the offsites, and 16TB for my own personal backups. I use separate backup disks for everything to keep the Synology NAS from becoming a single point of failure or filling up with duplicated data. This was a $700 investment, but I don't screw around with data durability, ever. The loss of data is basically a cardinal sin in my book.

- (Done!) I'm looking at doing full encryption for every single on-premises VM that I have. I currently use dm-crypt at the OS level for most things, but I also want to add a secondary layer via vCenter so every VM, whether or not it uses dm-crypt, is protected by one extra security blanket. We just have to hope VMware didn't build any backdoors into that proprietary encryption. :)

- (Done!) I've planned out what my bulk storage system looks like, above and beyond the NAS. I'm looking at an R730xd, with 12x3.5in bays, loaded with Seagate Exos X18 16TB drives in RAID 6, for a total usable capacity of 160TB. Probably running TrueNAS. 2x10Gb Mellanox ConnectX-3 NICs for iSCSI, 2x1Gb links for management. Another 1500VA UPS. 2x2TB SATA SSD cache disks in RAID 1. This will be approx. $5,500, though, so it won't happen until it's actually needed. But good luck filling THAT up, lol.

- (Replaced with Broadcom 57810 NICs) I purchased a Mellanox ConnectX-3 10Gb NIC to try out. I might put it into ESXi1 to see how it performs, because ESXi1 currently has all the backup drives hanging off of it and it's stuck using regular Gigabit Ethernet to pull backup data. But then again, so is the NAS, so it probably won't help anyway except when communicating with ESXi3. These NICs will be what I use to replace the ConnectX-2s that I have if and when I go for fully redundant networking.

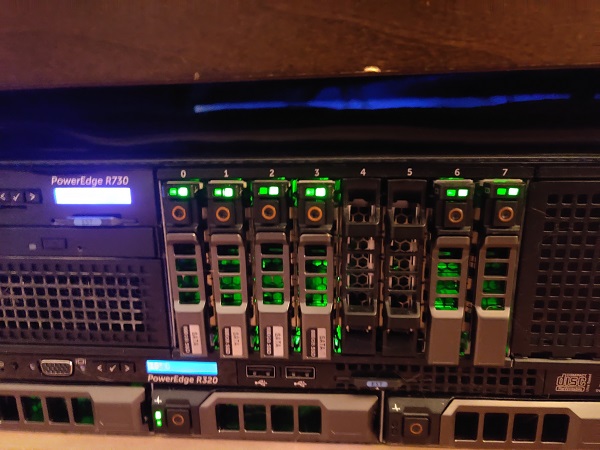
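The 160TB figure in the bulk storage plan above follows from RAID 6 giving up two drives' worth of space to parity. A minimal sketch of that arithmetic; the function name `raid_usable_tb` is hypothetical, and the result ignores filesystem overhead and the TB/TiB difference:

```python
def raid_usable_tb(drive_count: int, drive_tb: float, parity_drives: int) -> float:
    """Usable capacity of a parity RAID set, before filesystem overhead."""
    if drive_count <= parity_drives:
        raise ValueError("need more drives than parity drives")
    return (drive_count - parity_drives) * drive_tb


# Planned bulk storage: 12 x 16TB drives in RAID 6 (two drives' worth of parity).
bulk_tb = raid_usable_tb(drive_count=12, drive_tb=16, parity_drives=2)
print(f"RAID 6, 12 x 16TB: {bulk_tb:.0f}TB usable")  # 160TB
```

The same helper works for RAID 5 by passing `parity_drives=1`.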

- (Done) I may buy two more Intel 800GB Enterprise SSDs to just fill out the front of my R730 and get that RAID5 capacity up to 4TB usable and get it over with. We're not low on capacity, just thinking ahead. This would necessitate a host reboot I believe. I'll announce that if needed.

- (Done!) I have additional testing to do around my OpenVPN links. Interestingly, the download speed through the links appears to be excellent, far above my 250 Mbps estimate. But the upload speed is subpar for some reason. I suspect MTU settings. Bringing the upload speed up to match the download speed will make the cache locking I implemented virtually unnoticeable. This is a test of one of the links:

root@pomf:/mnt/storage/pomf# speedtest

Speedtest by Ookla

Server: Grupo GTD - Miami, FL (id = 11515)

ISP: FranTech Solutions

Latency: 39.03 ms (0.59 ms jitter)

Download: 757.61 Mbps (data used: 1.3 GB )

Upload: 162.70 Mbps [====\ ] 23% ^C
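To put a number on the asymmetry in the test above, here is a rough sketch that pulls the two speeds out of the human-readable output and compares them. The `parse_speeds` helper and the regex are my own illustration, not part of the speedtest tool, and the upload figure is only a partial reading since the run was interrupted at 23%:

```python
import re

# The two relevant lines from the test run above.
SAMPLE = """\
Download: 757.61 Mbps (data used: 1.3 GB )
Upload: 162.70 Mbps [====\\ ] 23% ^C
"""


def parse_speeds(text: str) -> dict[str, float]:
    """Return {'download': Mbps, 'upload': Mbps} for the lines present in text."""
    speeds = {}
    pattern = r"^\s*(Download|Upload):\s+([\d.]+)\s+Mbps"
    for label, value in re.findall(pattern, text, re.MULTILINE):
        speeds[label.lower()] = float(value)
    return speeds


speeds = parse_speeds(SAMPLE)
ratio = speeds["upload"] / speeds["download"]
print(f"upload is {ratio:.0%} of download")  # upload is 21% of download
```

Re-running this after each MTU change would give a quick before/after comparison.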

- (Decision made) I still don't know how the RamNode incident will play out, but this may necessitate improvements in provider resiliency. We'll see. I got escalated to a manager so maybe there's still hope. But I'm still thinking about how I intend to deal with this. I'm also trying to contact ECO about exclusively emailing me still. And the IWF still hasn't responded to my "we're on the same team bro" emails after refusing to talk to me. UPDATE: I'm gonna leave RamNode.

- (Done!) To prevent provider tomfoolery, I need to encrypt my cache drives for Pomf on the endpoints.

- (Done!) There are a few services not using HTTPS inside my OpenVPN tunnels, and that really should be rectified. Keys: Guac/Ghost

- (Done!) I had a great idea - for single-homed services like the Webring, Tracker, etc, I should really have a dedicated node that does NOT handle Pomf traffic so that all those services aren't bogged down by it. This will be a smaller BuyVM node in NYC with DDoS protection from PATH.

- (Done!) The seedbox will be relocated to my on-prem infra and rebuilt from the ground up. This is a requirement before dropping RamNode.

- (Done in next maintenance window) I plan to isolate all VMs in my internal LAN from all other VMs except where specific rules allow traffic. This is so if someone makes a WireGuard tunnel into the protected network and THEY get hacked, it can't come back to bite me. Still looking at how to implement this. Probably moving all my services and firewall rules to 10.0.1.0/24 or something and then not bridging both /24s together, then setting NAT to /23.

- (Done!) I plan to work on a transparency reporting page for externally initiated takedowns/abuse reports. For content removal initiated by myself, I do not intend to provide notice, but my policy still applies: nothing will be removed unless it is malware or illegal (e.g. CP). Due to liability and transparency concerns, I do not voluntarily moderate content except according to those two rules.

- (Done!) Implement LIDAR.

- (Done!) Implement SONAR.

- (Done!) Fix outbound gateway group redundancy broken in the pfSense upgrade. Possible default route issue alongside the WireGuard problem. This item should also be set aside for trying to make pfSense as resilient as possible to outbound gateway disruptions.

- (Done in next maintenance window) Get to Debian 11 across the board.

- (Done!) Update the intra-tunnel ping check latencies and packet loss thresholds for warning/critical.

- (Done!) I need a Tor Browser VM inside my network to validate certain abuse reports.

- (Done!) Fix rewrite bug on Ghostbin (See: Kei Email)

- (Done!) Upgrade the witness script to re-add servers after some time, as well as failsafe if all servers are experiencing issues.

- (Done!) Redo the logrotate config on everything so we don't run out of disk space under extreme load.

- (Done!) Wowee, Xonotic updated! Guess I should update the server!

- (Done!) The Superpull system - A one stop shop for abuse report handling. Need to build. Have ideas. (With the conversion to cache validation with the slice ranges, this is actually complete!)

- (Done!) Improve Pomf monitoring to detect upstream failures.

- (Done!) Utilize cache slicing to deal with long initial cache load times.

- (Done!) Use 128GB of flash disk to cache all the reads from the HDDs. Would help greatly with slice reads.

- (Done!) Test out Nginx socket sharding (reuseport). (Not sure if it helps...)

- (Done!) It is time for an internal wiki.
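For the cache slicing item above, this is a rough sketch of how a requested byte range maps onto fixed-size, aligned slices, which is the general idea behind slice-based caching (Nginx's slice module works along these lines). The 1 MiB slice size and the `slice_ranges` helper are assumptions for illustration, not the actual Pomf config:

```python
def slice_ranges(first_byte: int, last_byte: int, slice_size: int) -> list[tuple[int, int]]:
    """Return the aligned (start, end) slices, inclusive, covering a byte range."""
    if first_byte < 0 or last_byte < first_byte or slice_size <= 0:
        raise ValueError("invalid range or slice size")
    # Round the start down to the nearest slice boundary.
    start = (first_byte // slice_size) * slice_size
    ranges = []
    while start <= last_byte:
        ranges.append((start, start + slice_size - 1))
        start += slice_size
    return ranges


MIB = 1024 * 1024  # assumed slice size
for start, end in slice_ranges(1_500_000, 3_000_000, MIB):
    print(f"bytes={start}-{end}")
```

Only the slices a client actually touches need to be fetched and cached, so the first byte can go out without waiting for the whole file to load. The sketch does not clamp the last slice to the file size.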