This will be a distinctly different kind of article from my usual writings, but it's a very important topic. I apologize if this one's a bit too stuck up.

When we talk about infrastructure, it is very easy to overlook the fact that people are intimately involved in every facet of it. For those experienced in either software development or infrastructure engineering, our first instinct when talking about the underlying technology is often to completely isolate the technology from the users. Even if provisions are made for things like UI/UX, that's usually coming from a productivity standpoint, not an empathetic standpoint. Rather, what gets talked about is redundancy, failover, auto-scaling, load balancing, etc.

Take, for example, a system that is designed to improve warehouse management for a company and needs to be up 24x7. You may have servers, network links, DNS stuff to look at, users to accommodate, etc. Now, in the most dehumanized scenario, users can be viewed as replaceable, amorphous entities, slotted into a planned role hierarchy if all they do is repeatable, low-skill tasks in this proposed system (e.g. move this box over there). It's a very capitalistic way of thinking about it, but this can happen at scale.

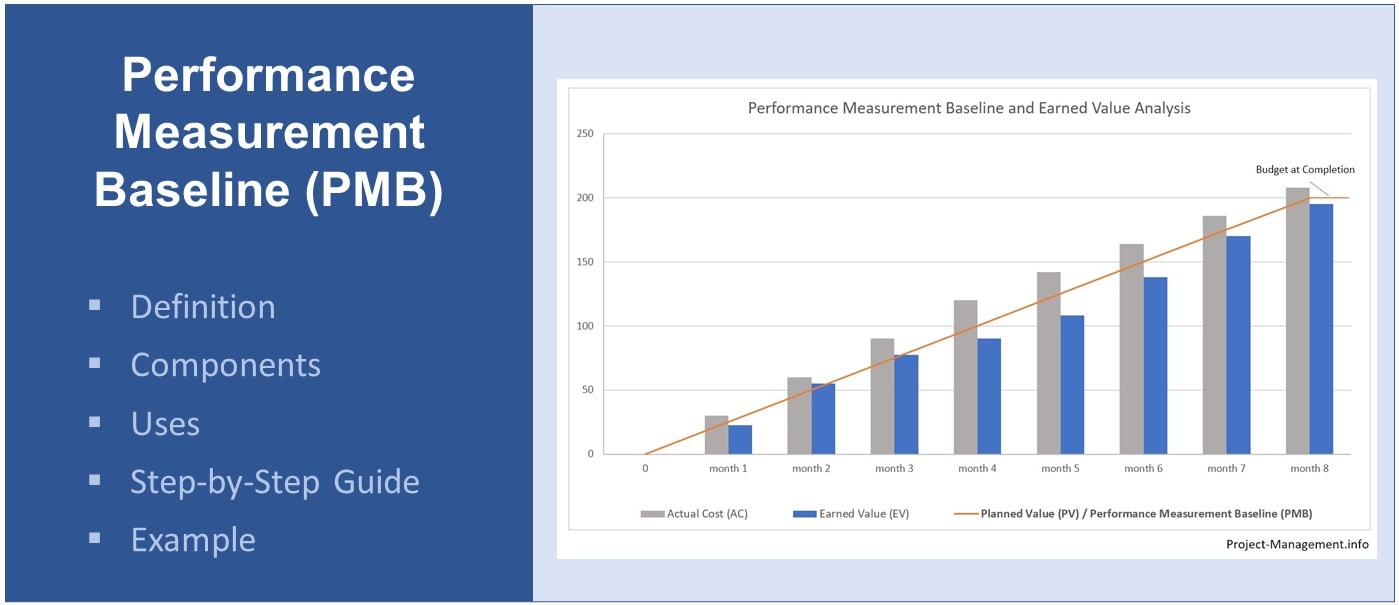

Look at warehouse workers tasked with moving things around for a giant company such as Amazon. Workers are treated as if they were components of a machine, and if a component performs under established baselines, it is replaced. The metrics drive the workers, no matter how inhuman they are, or what the worker has to do to stay afloat. Perform, or die. The programmers that make these things may never think about the ramifications of their little baselining system. They are not actually the ones doing the work that their system is interacting with. And what's worse - they may adjust the targets based on the data they're getting. Remember - when a metric becomes a target, it stops being a good metric.

This scenario breaks down a little bit when a worker has dependencies placed upon them, or if they generate those dependencies. A great example of a dependency is knowledge of an undocumented system, or acting as a subject matter expert for a set of business critical components. The worker gains value if they are not replaceable or if they perform above baselines, because now they have value above and beyond the pigeonhole they were placed in. If this continues, the worker stops being a cog in a machine and instead becomes the lynchpin of an operation, especially when the cost of losing the worker far exceeds their salary. This is the only time capitalism will actually treat a worker fairly, because the company has something to lose by not doing so.

However, from a risk perspective, this is not good. If that worker DOES go, whether it be to another company or the great beyond, the impact could be severe. IT professionals call this The Bus Factor, which is another wonderful dehumanization of workers - How many of your IT employees can get hit by a bus before there are significant skill gaps? How long can you go without those employees before your operations collapse? (How many times can you call it The Bus Factor before you realize the death of your colleagues is a shit topic when framed as a "business risk"?)
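For the curious, the number itself is easy to compute once you write down who understands what. The sketch below is only an illustration: the systems and names in `KNOWLEDGE` are invented, and it treats the bus factor as the size of the smallest group whose loss leaves some system with nobody who knows it, which is one common reading of the term rather than a formal definition.

```python
# Hypothetical inventory: which people can operate which systems.
# Every system and name here is made up for illustration.
KNOWLEDGE = {
    "dns": {"alice", "bob"},
    "storage": {"alice"},
    "web": {"alice", "bob", "carol"},
}


def bus_factor(knowledge):
    """Smallest number of people whose loss leaves some system with no one who knows it.

    The least-covered system decides the answer: lose everyone who
    understands it and that system is orphaned.
    """
    return min(len(people) for people in knowledge.values())


def single_points_of_failure(knowledge):
    """Map each sole maintainer to the systems that only they understand."""
    result = {}
    for system, people in sorted(knowledge.items()):
        if len(people) == 1:
            (person,) = people  # unpack the only member of the set
            result.setdefault(person, []).append(system)
    return result


if __name__ == "__main__":
    print("bus factor:", bus_factor(KNOWLEDGE))
    print("sole maintainers:", single_points_of_failure(KNOWLEDGE))
```

A one-person operation is the degenerate case: every system maps to the same single name, so the result is 1 no matter how redundant the hardware underneath is.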

Now, why the hell am I talking about capitalism and workers' rights? Well, we need to take this to its logical extreme to make sense: Me! I am the sole owner and operator of literally everything here. The bus factor of Lain.la is 1. Now, I'm probably one of the rare few sysadmins who not only works in the field of technology, but also doesn't HATE technology when I get home. Most people start tech as a hobby, then move into doing it as a job, leaving the hobby behind because the last thing they want to see after a 40-hour work week is more tech. It reminds them of work, and so it's no longer fun. This also means that the people doing tech as a hobby are notoriously bad at it.

Note: Even I feel the effects of this sometimes, which is why things don't get done quite as fast as I'd like, but I also built my systems to be as pain-free as possible without compromising reliability and security. The closest I've come to onboarding some backup was paying someone to write some custom code around a separate system, and that did not require any additional access at all. I keep mentioning it all over the place because it feels like I gave up, even though in reality that is not the case.

So, what happens if I get hit by a bus? Who will know? What happens to my systems, code, and servers? Things would probably run for some time since I pay everything for over a year in advance, but the physical systems may not last as long with nobody paying the bills. Power gets cut from lack of payment, and that's it. Someone shows up to pick up the pieces after my death and unplugs the servers? That's it too.

I engineer many, many components to be redundant, but overlook myself as the primary single point of failure. What's worse is that I can't just hire someone because A. I don't trust anybody, B. I don't have a budget to do that, and C. that doesn't solve the home servers problem without literally inviting someone into my home. Is this an issue right now? No. Is this an issue in a month? No, so long as I don't kick the bucket. Is this an issue for the next five years? Yes. If I travel and something fails, it's not getting fixed until I'm back. If I get tired of running all this, then that's it. If something happens to my financial situation, this project is definitely not in the "must have to live" category. If I need to renovate, move, or so much as replace my carpeting, those gorgeous uptime values are going into the ground while I shuffle everything around.

So what will I do about it? Well, for now, nothing. There is the argument that I could shift literally everything into VPSes and then so long as the bill is paid it'd run for a long time, but I have *big* plans that are not cost-compatible with that, and I like playing with hardware (and ensuring data custody) a lot. I could co-locate all my systems, but then I have to get into buying committed bandwidth from a datacenter, and if something fails I have to drive however long to deal with it. Add the exorbitant cost, and the fact that I'd be trusting random people in a shared environment.

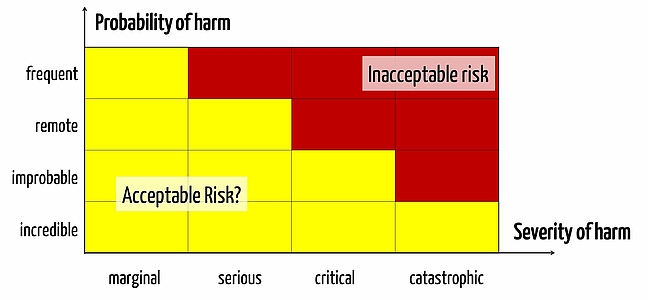

I could try to find some volunteers, but that is a security and reliability nightmare. I don't want anyone in my systems. I'm a firm believer in the benevolent dictator concept - so long as you have vision and execution. It's worked well here after all. So this leaves me at... no solution at all. In the security field, this is known as "Risk Acceptance", and it's all we've got to go on. I could fall back to the cop-out argument of "this shit is free so get bent if it goes down" but that's not how I like to operate and not how you build a reputation either.

I hope this helps illustrate that running systems is not all just programming and web servers. It is easier to separate the technology from the human aspect, but this separation is simply not feasible in most scenarios that aren't just crippling corporate applications. Please also note - this article is not meant to act as an "I'm getting tired of Lain.la" article either - this is absolutely not the case. It's just food for thought as to why self-hosted services are a notorious revolving door, and as a general musing on the state of infrastructure engineering.

-7666