Hello dear reader! I come to you from yet another thousand miles away from the datacenter to write an article clarifying some nuances of how I handle terrorist content on Pomf. Terrorist content can be defined as any video or material related to a domestic, international, extremist or other terrorist incident or organization, such as a recording of an attack or a propaganda video.

Recently, I have been getting correspondence from the Terrorist Content Analytics Platform (TCAP), a newcomer to the terrorist material reporting "scene". I have been very impressed with their hard work. Their initial email to me, sent when they started up their service on my site a few months ago, contained roughly 70 positive hits for Islamic terror propaganda videos that had made their way onto Pomf. I promptly removed them and passed along a "thank you". However, their second email contained "only" domestic terrorist videos, such as the Christchurch shooting recording. I denied this request. You may ask yourself, "What is the difference between the two?" I will try to explain my reasoning in detail from multiple angles: legal liability, intent, and historical value.

Operational Background:

As a refresher, Lain.la and all of its services are hosted in the United States. All data resides there, which means all data is subject to US law. No other jurisdiction has authority over it, but due to the nature of the internet, that doesn't mean the data isn't served outside the US (in fact, a lot of traffic absolutely goes outside the US). So while I can tell other countries to pound sand, they also have the right to block or limit access to my services (which is fine by me: sufficiently savvy users can evade these rudimentary blocks, as they're usually implemented at the DNS level).

As a matter of strict policy, I will only accept takedowns from third parties when the material breaks a US law, such as copyright infringement under the DMCA. If it doesn't break a law, it stays. Internal takedowns (i.e. those initiated by me) have less stringent policies, but I still generally only remove things based on my existing rules (usually these are just proactive investigations into sources of illegal files). Some exceptions are service abuse, such as pushing 6Gbps of traffic for a single set of porn files, or a site using Pomf as backhaul for its ad-supported service in violation of the ToS, which gets that site blacklisted. Also, if the original uploader can prove that they own the file they uploaded, they can request a takedown of their own material. Other than that, my service is truly net neutral, as it should be. I also have a transparency page where you can see all of the incidents or reports that led to a takedown on Pomf here.

Legal Liability:

Now, liability for hosting these things gets interesting. Per Section 230 of the Communications Decency Act, I'm not responsible for hosting user-generated content. I also certainly don't endorse user-generated content, and I don't direct users to it via some algorithm. A user is always the one who uploaded a file, and that user is the one who then distributed it (the links are private and cannot be brute-forced), so I am not liable even though the file is served from my services. You may also note that the very first line of my terms of service says "The providers ("we", "us", "our") of this web site and all its provider/owner-operated subdomains ("Service") are not responsible for any user-generated content ("Content") and accounts.". With all of this in mind, however, if a file is brought to my attention via an abuse report, then I must decide whether or not to keep it, and one could argue that this decision amounts to either condoning or condemning the file. My counterargument is this: my decision is strictly based on the law and a reasonable interpretation of it. I am not making a personal judgment about the file; I am simply weighing the report's veracity against the current legal environment and determining the file's status from there. At worst, I may err on the side of caution, because I am not a lawyer.

For an argument in favor of removal, let's dive a little deeper into the legality of the material itself. There are a few laws I want to bring up here:

- The EU Terrorist Content Online Regulation

- The UK Terrorism Act 2006

- The US definition of "Conspiring to provide material support to a foreign terrorist organization" (18 U.S.C. § 2339B).

So, we can generally write off the UK and EU simply because my data isn't there, but I wanted to bring them up anyway as examples of stronger legislation aimed specifically at publishing this content. As you can see from those two links, simply providing the content for consumption is against the law there. In the US, providing that content is only illegal if it aids and abets a foreign terrorist organization such as ISIS. This particular case from just last month is exactly the kind of case law that shows such prosecutions happen, and that I would incur significant legal liability if I left that material up. Therefore, it gets removed, as the evidence in favor of liability outweighs the evidence against it.

Now, when we look at the publishing of videos related to domestic terror incidents not tied to a specific foreign organization, that's where the US definition falls apart. Isolated shootings tend not to be part of any major organizational effort to destabilize or harm the United States. You may argue that some incidents fall under the flag of white supremacy, but only a few organizations in that category would count as terrorist organizations here today, and the videos of these incidents reported to me carry no propaganda branding whatsoever. If someone posted a shooting video covered in Atomwaffen logos and endorsements of the attack, that would absolutely qualify for removal. However, a raw video? Propaganda, this does not make.

Intent:

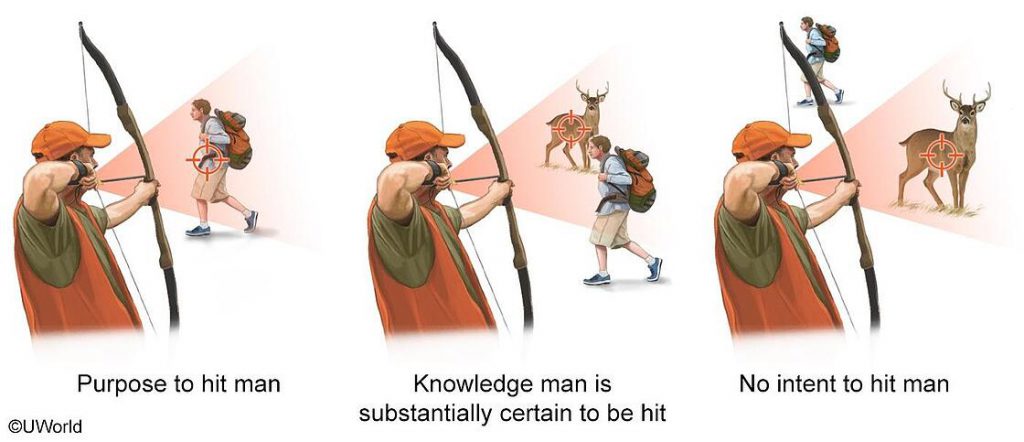

My previous point provides an excellent segue into my next major topic: intent. What was the original purpose of uploading and subsequently sharing the video? Was it for archival purposes? Was it to push an ideology? Was it to show the world what evil looks like? Since I don't collect this information, I can't say for sure, so the only data points we have to speculate from are the content of the video and where it was shared. Usually, if a video has no branding on it and is just a raw recording of an event, we can safely say it is raw footage with no innate ability to convince anyone of anything except how horrible people can be. However, if we see that little ISIS flag in the top right and a whole bunch of front-line footage showing potential victories of the Islamic State, then yes, we can safely say the video was meant to demonstrate ISIS's ideology and their execution of that ideology, and into the bin it goes.

Note: This next section involves my personal opinions and can be regarded as speculation, or as additional context on my beliefs.

Historical Value:

Critically, Pomf is an archive. Files don't get deleted, period, outside of the reasons stated above. I have enough storage to keep that up for the next three years, even at our current growth rate. It is my opinion that the destruction of data, especially data around critical historical events, is a moral crime. Deleting raw videos of shootings or terrorist acts would prevent the preservation of these events for analysis and understanding. It would wipe them from the history books. Some may argue that this is a good thing: that the perpetrators shouldn't get their own Wikipedia article, because notoriety is typically what they're trying to gain. I tend to agree, but it is in no way my fault that the fetishization of mass shooters occurs. That is the fault of the media, and of the failure of countries like the United States to properly combat the root causes of these incidents in the first place.

In conclusion, I remove terrorist material only when it exposes me to significant legal liability, which usually means material related to foreign terrorist organizations. Documents and recordings related to domestic terrorism, or to terrorism not aligned with a foreign (or even domestic) terrorist organization, remain on Pomf per my policies.