It is no secret that I spend a lot of time (and money) on Lain.la to make sure it runs better than just about any other collection of freebie services. While the services themselves are generally par for the course, it's the infrastructure where I like to shine. This means continually reviewing and improving things as load scales up, accounting for the risks of infrastructure failure and balancing those risks against the cost of mitigating them. I try to account for just about everything - storms, power outages, stray backhoes. You name it - I've probably thought of it.

The following is a list of interesting projects to improve failure tolerance that I have planned out, along with their estimated cost, and a reason as to why I want to do them but haven't done so yet. The ideas scale from "simple (not really)" to "stupid, as in way overkill".

Project A: Completely Redundant Networking

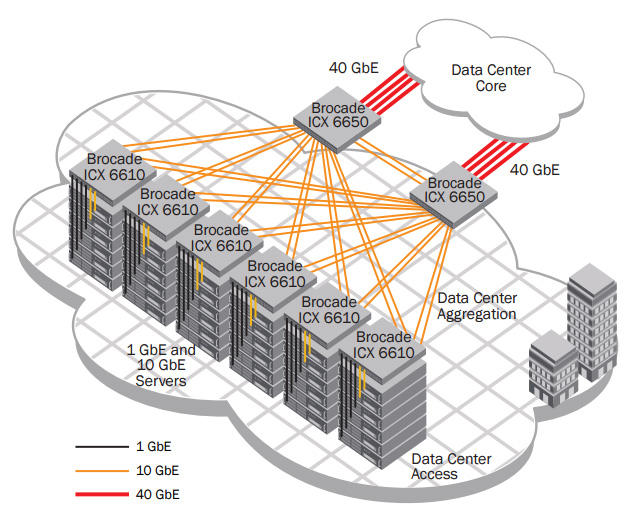

I wrote in an earlier post that I bought a cold spare Brocade ICX6610 switch because I feared if I lost the switch I'd be down for literal days. This was triggered by a spurious switch crash that I still have no explanation for. The idea here is simple. If I lose a switch, I should not lose the whole network. So, I would integrate the new switch directly into the system as a redundant pair. How would I do that? What happens if a switch with a server plugged into it fails? Ah, such questions. I have answers.

Each server would be getting a minimum of FOUR uplinks - two in each category, one to each switch, one from each NIC. The cable layout per server would look like this:

- 1GbE Management Network NIC1 -> icx1.lain.la

- 1GbE Management Network NIC2 -> icx2.lain.la

- 10GbE Host Comms Network NIC3 -> icx1.lain.la

- 10GbE Host Comms Network NIC4 -> icx2.lain.la

From a logical standpoint, the chart above gives you a more graphical representation of the idea, give or take some variables.

This means that if any single part of the networking fails, whether it be a NIC, a switch, a cable, a port, whatever - nothing will actually be down because there is always a second path to everything.
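
That claim is easy to sanity-check on paper. Here is a minimal sketch (not something actually running on Lain.la) that models one server's four links and asks whether any single NIC, switch, or cable failure leaves either network without a path. The names `LINKS`, `NETWORKS`, `surviving_paths`, and `single_points_of_failure` are made up for illustration, and it assumes the host bonds or teams each NIC pair so traffic really does move to the surviving link.

```python
from itertools import chain

# Hypothetical model of one server's cabling: (NIC, switch) pairs.
LINKS = [
    ("nic1", "icx1"),  # 1GbE management
    ("nic2", "icx2"),  # 1GbE management
    ("nic3", "icx1"),  # 10GbE host comms
    ("nic4", "icx2"),  # 10GbE host comms
]
NETWORKS = {"management": ["nic1", "nic2"], "host_comms": ["nic3", "nic4"]}


def surviving_paths(network, failed):
    """Return the (nic, switch) links of `network` that survive `failed`.

    `failed` is a set that may contain NIC names, switch names,
    or whole (nic, switch) tuples standing in for a dead cable or port.
    """
    return [
        (nic, switch)
        for nic, switch in LINKS
        if nic in NETWORKS[network]
        and nic not in failed
        and switch not in failed
        and (nic, switch) not in failed
    ]


def single_points_of_failure():
    """List every (network, component) where one failure kills the network."""
    components = set(chain.from_iterable(LINKS)) | set(LINKS)
    return sorted(
        (net, str(component))
        for net in NETWORKS
        for component in components
        if not surviving_paths(net, {component})
    )


# An empty list means no single failure isolates the server.
print(single_points_of_failure())
```

Delete either of the `icx2` links from `LINKS` and the matching `icx1` components show up in the list, which is exactly the situation a single-switch setup is in today.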

Now you may be asking yourself, "What about your WAN?". Yes, it's true this comes in on a single cable, and I have only one provider. However, I have an answer for that.

- Gigabit Verizon FIOS -> 1GbE WAN NIC1 core.lain.la

- T-Mobile 4G LTE ISP -> 1GbE WAN NIC2 core.lain.la (https://www.t-mobile.com/isp)

- 1GbE LAN NIC3 -> icx1.lain.la

- 1GbE LAN NIC4 -> icx2.lain.la

Magical, isn't it? You can get ISP services over 4G LTE! Yes, the latency would be trash, but that's fine. It's better than nothing. And it's cheaper than traditional coaxial cable and gets way better upload speeds.
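
The failover policy implied here is "use FIOS whenever it's up, fall back to LTE otherwise". As a rough sketch of that rule only: the table `WAN_LINKS` and the function `pick_active_wan` are hypothetical names, and how core.lain.la would actually detect a dead link (pinging a gateway, watching carrier state, etc.) is deliberately left out because the plan doesn't specify it.

```python
# Hypothetical WAN table for core.lain.la; the lowest priority number wins.
WAN_LINKS = [
    {"name": "fios", "nic": "NIC1", "priority": 1},
    {"name": "tmobile_lte", "nic": "NIC2", "priority": 2},
]


def pick_active_wan(healthy):
    """Return the name of the preferred WAN among `healthy`, or None.

    `healthy` is a set of WAN names that some external health check
    (not modelled here) currently considers usable.
    """
    candidates = [wan for wan in WAN_LINKS if wan["name"] in healthy]
    if not candidates:
        return None
    return min(candidates, key=lambda wan: wan["priority"])["name"]


print(pick_active_wan({"fios", "tmobile_lte"}))
print(pick_active_wan({"tmobile_lte"}))
```

Keeping the priority in data rather than in an if/else means a third uplink would just be another row in the table.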

So, why haven't I done this yet? Here are the answers:

- I estimate a capital cost of at least $250 for all the extra NICs and cables and an operational cost of $60/mo (T-Mobile is $50/mo and power for all this extra stuff would be $10/mo) that I'm not willing to pay at this time.

- I estimate significant downtime from ripping out ALL the networking here. I can shift VMs around as I please, but testing and implementation will be quite disruptive.

- I estimate this will take at least 20 hours of planning, wiring, testing, implementing, documenting, etc. That's a significant investment.
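
To put the money side in perspective, here is a quick back-of-the-envelope sketch using only the figures above. The function name `project_a_cost` is made up, and since the capital number is an "at least", everything it prints is a lower bound.

```python
CAPITAL_USD = 250         # "at least $250" for the extra NICs and cables
LTE_USD_PER_MONTH = 50    # T-Mobile
POWER_USD_PER_MONTH = 10  # power for the extra hardware


def project_a_cost(months):
    """Lower-bound total spend after `months` of operation."""
    monthly = LTE_USD_PER_MONTH + POWER_USD_PER_MONTH
    return CAPITAL_USD + monthly * months


for months in (12, 36, 60):
    print(months, "months:", project_a_cost(months), "USD")
```

The recurring $60/mo overtakes the one-time hardware cost by the fifth month, so the LTE line is the real expense here, not the NICs.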

Project B: Massive Storage Upgrades

I've been simultaneously happy and disappointed with my storage architecture. It's great because I have plenty on hand and it runs like a dream. It's disappointing, though, because it is NOT redundant (I don't mean RAID; I mean surviving, for example, a server, RAID card, or chassis failure), and if a server were to blow up, there would be no way to get its VMs onto a new server without restoring a backup or hot-attaching the dead server's datastores. So, here's my plan to fix this:

Two new storage servers - One for fast storage, one for regular speed storage.

Server 1 (Fast):

- PowerEdge R730xd 24-bay 2.5in storage server as a chassis OR used SAN

- At least two 6-core CPUs or better, 3GHz preferably for single thread performance, reasonable TDP for power savings

- 64-128GB DDR4 RAM for a hot cache

- 8-24 1.6TB SAS 12Gb/s Flash storage drives, in three separate 8-drive RAID5 arrays. 33.6TB usable with all 24 drives (if need be, I can buy this one array at a time to save money, provided the drives are available)

- QSFP 40Gbit/s iSCSI connection to icx1 and icx2 for ridiculously fast transfers to multiple hosts simultaneously

Server 2 (Regular):

- PowerEdge R730xd 12-bay 3.5in storage server OR, preferably, a Synology NAS with 2 PSUs and 12 3.5in bays as a chassis

- At least two 6-core CPUs or better, 3GHz preferably for single thread performance, reasonable TDP for power savings

- 32-64GB DDR4 RAM for a hot cache

- 12x 10TB Enterprise SAS/SATA HDDs (WD Red Pro or better) in a single RAID6 array (100TB usable)

- 10GbE iSCSI connection to icx1 and icx2 for fast throughput
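
The usable-capacity figures for both servers fall out of the usual parity math: RAID5 gives up one drive per array, RAID6 gives up two. A small sketch to double-check them (the helper `usable_gb` is a made-up name, and it works in whole decimal gigabytes before any filesystem or formatting overhead):

```python
def usable_gb(drives, drive_gb, parity_drives, arrays=1):
    """Usable capacity in GB of `arrays` identical parity RAID arrays."""
    if drives <= parity_drives:
        raise ValueError("an array needs more drives than parity drives")
    return arrays * (drives - parity_drives) * drive_gb


# Server 1: three 8-drive RAID5 arrays of 1.6TB flash drives.
fast = usable_gb(drives=8, drive_gb=1600, parity_drives=1, arrays=3)

# Server 2: one 12-drive RAID6 array of 10TB HDDs.
regular = usable_gb(drives=12, drive_gb=10000, parity_drives=2)

print("fast tier:", fast / 1000, "TB")
print("regular tier:", regular / 1000, "TB")
```

Calling `usable_gb(8, 1600, 1)` on its own gives the 11.2TB that each flash array adds if the drives are bought one array at a time.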

The benefit of having unified storage is that if a server fails, VMware HA can just boot the VM on another server automatically because the storage is decoupled from the server. Usually storage systems like this also have multiple redundant paths to the storage, so if a power supply, controller canister, drive, or NIC fails, you're not down. Also - this basically triples my capacity and storage speed. I would have to update the backup system though, but I can just attach a crap ton of external drives to an existing server and call it a day there.

So, why haven't I done this yet? Here's the answer:

- I estimate the capital cost of this project to be at least $8,000 and an operational cost of $75/mo for power and summer cooling. This is shockingly expensive, but it makes sense. I would never need storage upgrades for the next ten YEARS by my calculations with this proposal.

- Downtime would be basically zero since all of this is plug and play with the existing infrastructure and the vMotion capabilities I already have.

- I estimate that this project would take 20 hours of configuration and implementation. I also have some research to do regarding 40Gbit networking and QSFP adapters.
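
One way to make the "shockingly expensive, but it makes sense" argument concrete is cost per usable terabyte. This is only a sketch built from the numbers in this section: `cost_per_usable_tb` is a made-up helper, the $8,000 is a floor rather than a quote, and the $75/mo is applied flat for the full ten years.

```python
USABLE_TB = 33.6 + 100    # fast tier + regular tier from the plan above
CAPITAL_USD = 8_000       # "at least $8,000"
POWER_USD_PER_MONTH = 75  # power and summer cooling


def cost_per_usable_tb(capital_usd, monthly_usd, months, usable_tb):
    """Total spend over `months`, divided by usable capacity in TB."""
    return (capital_usd + monthly_usd * months) / usable_tb


up_front = cost_per_usable_tb(CAPITAL_USD, POWER_USD_PER_MONTH, 0, USABLE_TB)
ten_years = cost_per_usable_tb(CAPITAL_USD, POWER_USD_PER_MONTH, 120, USABLE_TB)
print(f"${up_front:.2f}/TB up front, ${ten_years:.2f}/TB over ten years")
```

That works out to roughly $60 per usable TB on day one and roughly $127 per usable TB once ten years of power are included.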

Project C: Complete Off-Grid Power Solution

My resiliency against power loss right now is pretty good. Power stability in my neck of the woods is above average, and even if there is a grid failure, I have 5 separate UPS systems and a generator I can kick in. However, this is not perfect. If there is a perfect storm (ha.) of events, I am screwed. The generator is manual, and the UPSes have a combined runtime of about 30 minutes. If I'm away from home, I have 30 minutes from the power event email to run back home and cut the power over to the generator. This has never happened, thankfully, but it can, and that scares me. The solution? Cut the grid out entirely.

Proposal A:

Since I own my land and home, I can do whatever I wish with it. Therefore, I would put in a 12kW solar system on my property with batteries that would run the place for at least 24 hours at normal load (my estimation - 40kWh of batteries or better). This would not only provide complete off-grid power generation and storage, but completely cut my power bill and even make it a revenue generating system.

What would this cost? A lot. I mean, we're talking at least $40,000 in parts and labor here for complete power independence and maybe a savings of $300-400/mo. It's a huge investment and would take hundreds of hours of time and planning. But, it is the best option as far as everything goes and means power would no longer be a concern.
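
For a sense of scale, here is the simple arithmetic behind those numbers. It's a rough sketch under loud assumptions: `payback_years` is a made-up helper, and it ignores any revenue from selling power back, tax incentives, battery degradation, and usable depth of discharge, none of which are estimated in this post.

```python
SYSTEM_COST_USD = 40_000  # "at least $40,000" in parts and labor
BATTERY_KWH = 40          # battery bank size from Proposal A
RUNTIME_HOURS = 24        # target runtime at normal load


def payback_years(cost_usd, savings_per_month_usd):
    """Years until monthly savings add up to the up-front cost."""
    return cost_usd / savings_per_month_usd / 12


# Average load the battery bank could carry for the full runtime target.
avg_load_kw = BATTERY_KWH / RUNTIME_HOURS

best_case = payback_years(SYSTEM_COST_USD, 400)
worst_case = payback_years(SYSTEM_COST_USD, 300)
print(f"payback: {best_case:.1f} to {worst_case:.1f} years")
print(f"implied average load: {avg_load_kw:.2f} kW")
```

So on savings alone the system pays for itself in roughly 8 to 11 years, and the 40kWh figure implies an average draw of about 1.7kW for the whole place.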

Proposal B:

A simple home propane generator would solve the power independence problem without any of the cost savings. This would be extremely cheap compared to the above option - maybe $4,000-$5,000 for a sizeable generator (15kW) with an automatic transfer switch from a company like Generac, plus installation cost. I already have a massive 500 gallon tank of propane on site that could run it for days and days. The only problem would be that there is no revenue generation, plus it's not quite as clean or "free" as solar, as I'd need to pay for the propane that it burns if there were an extended outage.

There are other smaller projects that I have planned, but these don't really deserve their own mention; they're just housekeeping or even more "pie-in-the-sky" dreams. But, I hope this article shows you how dedicated I am to this project and how even if the services I run are run-of-the-mill, the guy behind it ain't. :)