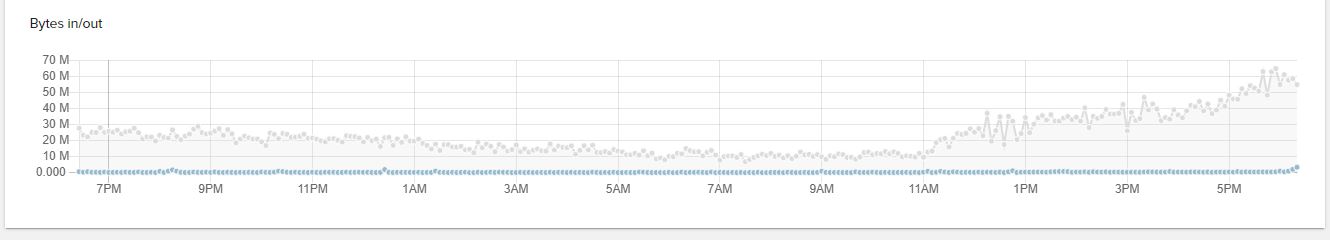

Recently it came to my attention that a certain Reddit poster has decided to use my pomf clone to host their reuploads of people's deaths. This is... unusual, to say the least. It also forced me to scale my infrastructure up immediately to handle the load. A constant outbound demand of 50-200 Mbit/s (UPDATE: IT IS SO MUCH WORSE THAN THAT, SEE IMAGE) is nothing to sneeze at, and once I go past my provider's outbound bandwidth cap, the overage charges get expensive. Reddit demand sucks.

I had already planned this scaling as a redundancy measure for lain.la, but now I'm doing it primarily as a cost-saving measure. I get 10TB of free outbound data with the main lain.la endpoint, which is tied to an OpenVPN tunnel. Outbound traffic from, say, Pomf goes through pfSense, then out to lain.la, then out to the user. That last hop is where I get charged if I go over 10TB.

When extrapolated, the Reddit traffic was on track for something on the order of 30TB if it stayed constant, so I had to move this project up immediately to avoid overage charges. The project is now done, so I can document what I did. It's... hackier than I would have liked. But it works really well.
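To put the 50-200 Mbit/s figure in terms of the 10TB allowance, here is a quick conversion. It assumes a 30-day billing period and decimal units (1 TB = 10^12 bytes), neither of which the provider terms above spell out; `mbit_to_tb` and `nodes_needed` are just illustrative helpers.

```python
import math

SECONDS_PER_DAY = 86_400


def mbit_to_tb(rate_mbit_s: float, days: float = 30) -> float:
    """Convert a sustained rate in Mbit/s into decimal terabytes over `days` (assumed 30)."""
    bytes_per_second = rate_mbit_s * 1_000_000 / 8
    return bytes_per_second * SECONDS_PER_DAY * days / 1e12


def nodes_needed(total_tb: float, allowance_tb: float = 10) -> int:
    """How many endpoint nodes, each with `allowance_tb` free outbound, cover `total_tb`."""
    return math.ceil(total_tb / allowance_tb)


if __name__ == "__main__":
    for rate in (50, 100, 200):
        tb = mbit_to_tb(rate)
        print(f"{rate:>3} Mbit/s -> {tb:5.1f} TB per 30 days, {nodes_needed(tb)} node(s) at 10TB each")
```

A sustained ~100 Mbit/s works out to roughly 32 TB per 30 days, which is in line with the ~30TB extrapolation and well past a single 10TB allowance.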

Alt.Lain.La

I deployed a new Ramnode instance, which I internally call "alt.lain.la". This server sits in Atlanta, GA, in a separate datacenter from lain.la (NYC), which gives geographic redundancy. It is configured identically to lain.la: same nginx configuration, same OpenVPN configuration, same firewall routing, same everything, EXCEPT that everything uses "10.9.0.0/24" instead of "10.8.0.0/24" in the OpenVPN, firewall, and nginx configuration. This distinguishes the gateway and clients on alt.lain.la from those on lain.la.
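Since alt.lain.la is the lain.la config with one subnet swapped, the copy can be done mechanically. This is a minimal sketch, assuming the configs are plain text; the author doesn't say how the files were duplicated, and `remap_subnet` plus the sample lines are hypothetical. It only rewrites addresses that fall inside the old /24, keeping the host part, so netmasks and public IPs pass through untouched. A manual review afterwards is still wise, since a config could reference the subnet in a form the regex won't catch.

```python
from __future__ import annotations

import ipaddress
import re

# Dotted-quad IPv4 address, optionally followed by a /prefix length.
IPV4_RE = re.compile(r"\b(\d{1,3}(?:\.\d{1,3}){3})(/\d{1,2})?\b")


def remap_subnet(text: str, old: str, new: str) -> str:
    """Move every address inside `old` to the same host offset inside `new`."""
    old_net = ipaddress.ip_network(old)
    new_net = ipaddress.ip_network(new)
    if old_net.prefixlen != new_net.prefixlen:
        raise ValueError("old and new subnets must be the same size")

    def swap(match: re.Match) -> str:
        try:
            addr = ipaddress.ip_address(match.group(1))
        except ValueError:
            return match.group(0)  # not a real address (e.g. 999.1.1.1): leave as-is
        if addr not in old_net:
            return match.group(0)  # netmasks, public IPs, other subnets: unchanged
        offset = int(addr) - int(old_net.network_address)
        mapped = new_net.network_address + offset
        return str(mapped) + (match.group(2) or "")  # keep any /prefix suffix

    return IPV4_RE.sub(swap, text)


if __name__ == "__main__":
    # Hypothetical sample: an OpenVPN `server` line and an nginx upstream line.
    sample = "server 10.8.0.0 255.255.255.0\nproxy_pass http://10.8.0.2:8080;"
    print(remap_subnet(sample, "10.8.0.0/24", "10.9.0.0/24"))
```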

OpenVPN

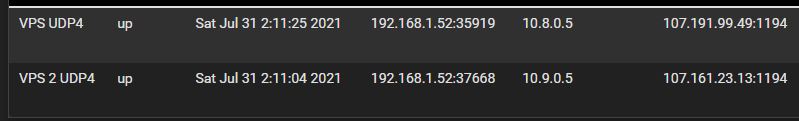

The mapping above shows how the two tunnels work at a logical level. 107.191.99.49 is lain.la, 107.161.23.13 is alt.lain.la.

Notes:

- Outbound traffic is pinned to a single endpoint rather than round-robin load balanced across both, because round robin can cause weird connection problems. Failover is supposed to work with gateway groups, but I haven't figured that out yet.

- Inbound traffic is moved from node to node by changing the A record. Later I may explore round-robin A records so it is truly redundant.

- I duplicated my NAT mappings from 10.8.0.1 to 10.9.0.1 in the pfSense NAT tables.

- Tracker.lain.church remains pinned to lain.la due to DNS.

- The seedbox remains pinned to lain.la because that's where the seedbox data lies.
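The gateway-group failover mentioned in the notes is tier-based in pfSense: the lowest-numbered tier with a gateway that is up carries the traffic, and gateways sharing a tier are load balanced. Putting the two tunnels on different tiers would therefore give failover without round robin. Below is a toy model of that selection rule only, not pfSense code; the gateway names are made up.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Gateway:
    name: str
    tier: int        # lower tier = preferred, as with pfSense gateway group tiers
    up: bool = True  # stand-in for the gateway monitor's up/down status


def pick_gateway(group: list[Gateway]) -> Gateway | None:
    """Return the gateway from the lowest tier that is up, or None if all are down.

    With distinct tiers this is pure failover. Equal tiers would mean load
    balancing in pfSense; this model just returns the first such gateway.
    """
    live = [gw for gw in group if gw.up]
    if not live:
        return None
    return min(live, key=lambda gw: gw.tier)


if __name__ == "__main__":
    # Hypothetical names for the lain.la and alt.lain.la tunnel gateways.
    vpn_group = [Gateway("LAINLA_VPN", tier=1), Gateway("ALTLAINLA_VPN", tier=2)]
    print(pick_gateway(vpn_group).name)
    vpn_group[0].up = False  # simulate the lain.la tunnel going down
    print(pick_gateway(vpn_group).name)
```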

The excellent thing about this setup is that it scales. I just add more subnets and hosts (10.10.0.0/24, 10.11.0.0/24, and so on) and duplicate the configs. I can have a new host with another 10TB of bandwidth up in about an hour, then move DNS to it with no interruption. If round-robin A records work, I can distribute load geographically and equalize bandwidth consumption across the fleet of endpoint nodes. This means lain.la could scale to dozens of TB of bandwidth without much trouble.
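The 10.N.0.0/24 numbering is regular enough to generate. This is a minimal sketch, assuming each node's gateway is the first host (.1), as with 10.8.0.1 and 10.9.0.1 in the NAT notes, and that every node comes with the same 10TB allowance; `node_plan` and `combined_allowance_tb` are hypothetical helpers, not part of the actual setup.

```python
from __future__ import annotations

import ipaddress

BASE_SECOND_OCTET = 8  # lain.la uses 10.8.0.0/24, alt.lain.la uses 10.9.0.0/24
ALLOWANCE_TB = 10      # free outbound data per endpoint node


def node_plan(count: int) -> list[tuple[str, str]]:
    """Return (tunnel subnet, gateway address) for each of `count` endpoint nodes."""
    if not 0 < count <= 256 - BASE_SECOND_OCTET:
        raise ValueError("second octet would leave the 10.0.0.0/8 range")
    plan = []
    for i in range(count):
        net = ipaddress.ip_network(f"10.{BASE_SECOND_OCTET + i}.0.0/24")
        gateway = next(net.hosts())  # first usable host, e.g. 10.8.0.1
        plan.append((str(net), str(gateway)))
    return plan


def combined_allowance_tb(count: int) -> int:
    """Total free outbound across the fleet, if load is spread evenly."""
    return count * ALLOWANCE_TB


if __name__ == "__main__":
    for subnet, gateway in node_plan(4):
        print(subnet, gateway)
    print(f"combined free outbound: {combined_allowance_tb(4)} TB")
```

The combined figure only holds if traffic is actually spread across the nodes, which is exactly what round-robin A records would provide; with everything pinned to one node, that node's 10TB is still the ceiling.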

A note after the fact:

I added a third node after the load got even worse. See the Reddit Pomf Incident article for more info.